What is Long Short-Term Memory?

Long Short-Term Memory (LSTM) is a popular type of Recurrent Neural Network (RNN) that has been widely used in Artificial Intelligence (AI) applications such as natural language processing, speech recognition, image captioning, and time series forecasting. Traditional RNNs suffer from the vanishing gradient problem: as the error signal is propagated back through many time steps it shrinks toward zero, so the network struggles to learn dependencies between distant elements of a sequence. LSTM mitigates this with a memory cell and a set of gates that control the flow of information, which lets it capture much longer-term dependencies.

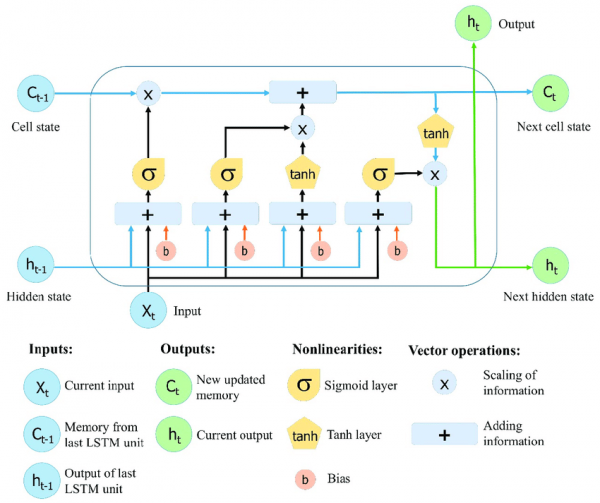

The key idea of LSTM is a memory cell that can store information over long periods and selectively forget or update it when necessary. Three gates regulate the flow of information into and out of the cell: the input gate decides how much new information is written to the cell, the forget gate determines how much of the old cell content is discarded, and the output gate controls how much of the cell's content is exposed as the hidden state, which is passed on to the next time step and to the next layer.
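For reference, these gates are commonly written as follows for time step $t$, in the standard formulation without peephole connections. The notation is conventional rather than taken from this article: $x_t$ is the input, $h_{t-1}$ and $c_{t-1}$ are the previous hidden state and memory cell, $W_*$, $U_*$ and $b_*$ are learned weights and biases, $\sigma$ is the logistic sigmoid, and $\odot$ is element-wise multiplication.

$$
\begin{aligned}
i_t &= \sigma(W_i x_t + U_i h_{t-1} + b_i) && \text{input gate} \\
f_t &= \sigma(W_f x_t + U_f h_{t-1} + b_f) && \text{forget gate} \\
o_t &= \sigma(W_o x_t + U_o h_{t-1} + b_o) && \text{output gate} \\
\tilde{c}_t &= \tanh(W_c x_t + U_c h_{t-1} + b_c) && \text{candidate values} \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t && \text{memory cell update} \\
h_t &= o_t \odot \tanh(c_t) && \text{hidden state}
\end{aligned}
$$

Because $c_t$ is updated additively, the direct gradient path from $c_t$ back to $c_{t-1}$ is just element-wise multiplication by $f_t$ (ignoring the gates' own dependence on $h_{t-1}$). When the forget gate stays close to 1, the error signal can travel through many time steps without being repeatedly squashed, which is the usual explanation for why LSTM copes with vanishing gradients better than a plain RNN.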

General look

In practice, an LSTM layer applies the same cell, with the same weights, to one input element at a time, updating the hidden state and the memory cell at each step; unrolled over time, this looks like a chain of repeating units. The hidden state after the last time step can be used directly for the final prediction or fed into a fully connected layer for further processing. LSTMs are trained with backpropagation through time (BPTT): the network is unrolled across the time steps of the sequence, ordinary backpropagation is applied to the unrolled graph, and the gradients for the shared weights are summed over all time steps.
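To make the unrolled view concrete, here is a minimal NumPy sketch of a forward pass through one LSTM layer. It assumes the standard gate equations without peephole connections; the function names, the random weights, and the stacked weight layout `[input, forget, candidate, output]` are hypothetical choices for illustration (Keras, for example, stores and initializes its weights in its own way). Training is left out, since a framework computes the BPTT gradients automatically.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(xs, W, U, b):
    """Run a single LSTM layer over xs, an array of shape (time_steps, features).

    Hypothetical parameter layout (for illustration only):
      W: (4 * units, features), U: (4 * units, units), b: (4 * units,),
      with the four row blocks stacked as [input, forget, candidate, output].
    The same W, U, b are reused at every time step.
    Returns all hidden states, shape (time_steps, units), and the final cell state.
    """
    units = U.shape[1]
    h = np.zeros(units)  # hidden state
    c = np.zeros(units)  # memory cell
    hidden_states = []
    for x_t in xs:  # process one sequence element at a time
        z = W @ x_t + U @ h + b  # pre-activations for all four blocks
        z_i, z_f, z_g, z_o = np.split(z, 4)
        c = sigmoid(z_f) * c + sigmoid(z_i) * np.tanh(z_g)  # forget old, write new
        h = sigmoid(z_o) * np.tanh(c)  # output gate exposes part of the cell
        hidden_states.append(h)
    return np.stack(hidden_states), c


# Example: 10 time steps of a 1-feature sequence, 4 LSTM units, random weights
rng = np.random.default_rng(0)
units, features, steps = 4, 1, 10
W = rng.normal(scale=0.5, size=(4 * units, features))
U = rng.normal(scale=0.5, size=(4 * units, units))
b = np.zeros(4 * units)
xs = np.sin(np.arange(steps) * 2 * np.pi / 50).reshape(steps, features)

hs, c_last = lstm_forward(xs, W, U, b)
w_out = rng.normal(size=units)  # hypothetical dense readout weights
prediction = w_out @ hs[-1]  # only the final hidden state feeds the readout
print(hs.shape, prediction)
```

Because the loop reuses `W`, `U` and `b` at every step, the sequence length is not baked into the parameters. Only the final hidden state `hs[-1]` reaches the readout, which mirrors an LSTM layer that returns just its last output.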

Code Example

Here is an example that uses an LSTM to predict the next value in a sine wave:

```python
import numpy as np
from keras.models import Sequential
from keras.layers import Input, LSTM, Dense

# Generate sine wave data (one period every 50 samples)
data = np.sin(np.arange(1000) * 2 * np.pi / 50)

# Split data into input windows and next-value targets
look_back = 10
x = []
y = []
for i in range(len(data) - look_back):
    x.append(data[i:(i + look_back)])  # look_back consecutive values
    y.append(data[i + look_back])      # the value that follows them
x = np.array(x)
y = np.array(y)

# Reshape input data to [samples, time steps, features]
x = np.reshape(x, (x.shape[0], x.shape[1], 1))

# Create LSTM model: one LSTM layer with 4 units, then a single-output dense layer
model = Sequential()
model.add(Input(shape=(look_back, 1)))
model.add(LSTM(4))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')

# Train the model (batch_size=1 makes each epoch slow; a larger batch trains faster)
model.fit(x, y, epochs=100, batch_size=1, verbose=2)

# Use the model to predict the value that follows the last look_back points
test_input = data[-look_back:]
test_input = np.reshape(test_input, (1, look_back, 1))
test_output = model.predict(test_input, verbose=0)
print("Predicted value:", test_output[0, 0])
```

In this code, we first generate a sine wave and slice it into overlapping windows of 10 consecutive values, each paired with the value that immediately follows it as the target. We then reshape the input data to the [samples, time steps, features] layout expected by the LSTM layer. Next, we build a model with a single LSTM layer of four units followed by a dense output layer with one neuron. We compile the model with mean squared error loss and the Adam optimizer and train it for 100 epochs. Finally, we use the model to predict the value that follows the last 10 points of the series.
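The loop that builds the training pairs can also be written with NumPy's `sliding_window_view` (available since NumPy 1.20). The sketch below is an optional, vectorized alternative under that assumption; `make_windows` is a hypothetical helper name, not part of NumPy or Keras. It pairs every window of `look_back` consecutive values with the value that follows it.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def make_windows(series, look_back):
    """Build (x, y) pairs for next-value prediction without a Python loop.

    x[k] holds series[k : k + look_back] and y[k] is series[k + look_back].
    x is returned with shape (samples, look_back, 1) for an LSTM layer.
    """
    # Windows over all but the last value, so every window has a target after it
    windows = sliding_window_view(series[:-1], look_back)
    x = windows[:, :, np.newaxis].copy()  # copy: the view is read-only
    y = series[look_back:].copy()
    return x, y


data = np.sin(np.arange(1000) * 2 * np.pi / 50)
x, y = make_windows(data, look_back=10)
print(x.shape, y.shape)  # (990, 10, 1) (990,)
```

For a series of length N this yields N - look_back samples, and `x` already has the [samples, time steps, features] layout, so no separate reshape step is needed before passing it to `model.fit`.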

Conclusion

Long Short-Term Memory (LSTM) is a powerful type of Recurrent Neural Network that can capture long-term dependencies and has been used successfully in many AI applications. By introducing a memory cell and gates that regulate the flow of information, LSTM can model sequential data far more effectively than a traditional RNN. The example code demonstrates how to use LSTM to predict the next value in a sine wave, but the same approach can